NVIDIA has introduced NeMo Guardrails, open-source software that helps developers make AI chatbots safer and more secure. NeMo Guardrails helps applications built on large language models generate accurate, appropriate, and secure text responses that stay within specific domains.

Enhancing Safety and Security: NeMo Guardrails allows developers to establish three types of guardrails:

- Topical guardrails: Prevent chatbots from providing responses outside the desired areas of expertise.

- Safety guardrails: Ensure that chatbots respond with accurate information, filtering out inappropriate language and relying on credible sources.

- Security guardrails: Restrict chatbots to connecting only with trusted third-party applications.
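To make the topical guardrail idea concrete, here is a minimal Python sketch. It is not NeMo Guardrails code: the class `TopicalGuardrail`, the keyword allowlist, and the refusal message are hypothetical, and NeMo Guardrails uses its own configuration and matching rather than a simple keyword check. The sketch only shows the core pattern of checking a message against the permitted domain before it reaches the model.

```python
# Hypothetical sketch of a topical guardrail: NOT the NeMo Guardrails API.
from dataclasses import dataclass
from typing import Callable


@dataclass
class TopicalGuardrail:
    """Blocks messages that mention none of the allowed (lowercase) topic keywords."""

    allowed_keywords: set[str]
    refusal: str = "Sorry, I can only help with billing questions."

    def check(self, message: str) -> bool:
        # Normalize: lowercase each word and strip surrounding punctuation.
        words = {w.strip(".,!?;:").lower() for w in message.split()}
        return bool(words & self.allowed_keywords)

    def respond(self, message: str, bot: Callable[[str], str]) -> str:
        # Forward on-topic messages to the bot; refuse everything else.
        return bot(message) if self.check(message) else self.refusal


def echo_bot(message: str) -> str:
    # Stand-in for a real LLM-backed chatbot.
    return f"Answering: {message}"


rail = TopicalGuardrail(allowed_keywords={"billing", "invoice", "refund"})
print(rail.respond("How do I get a refund?", echo_bot))  # Answering: How do I get a refund?
print(rail.respond("Who won the election?", echo_bot))   # Sorry, I can only help with billing questions.
```

A keyword check is deliberately crude; a production rail would more likely compare the meaning of the message to the allowed topics.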

Read NVIDIA’s blog post for more information.

Meanwhile, Microsoft releases “A Bible for Language Models”.

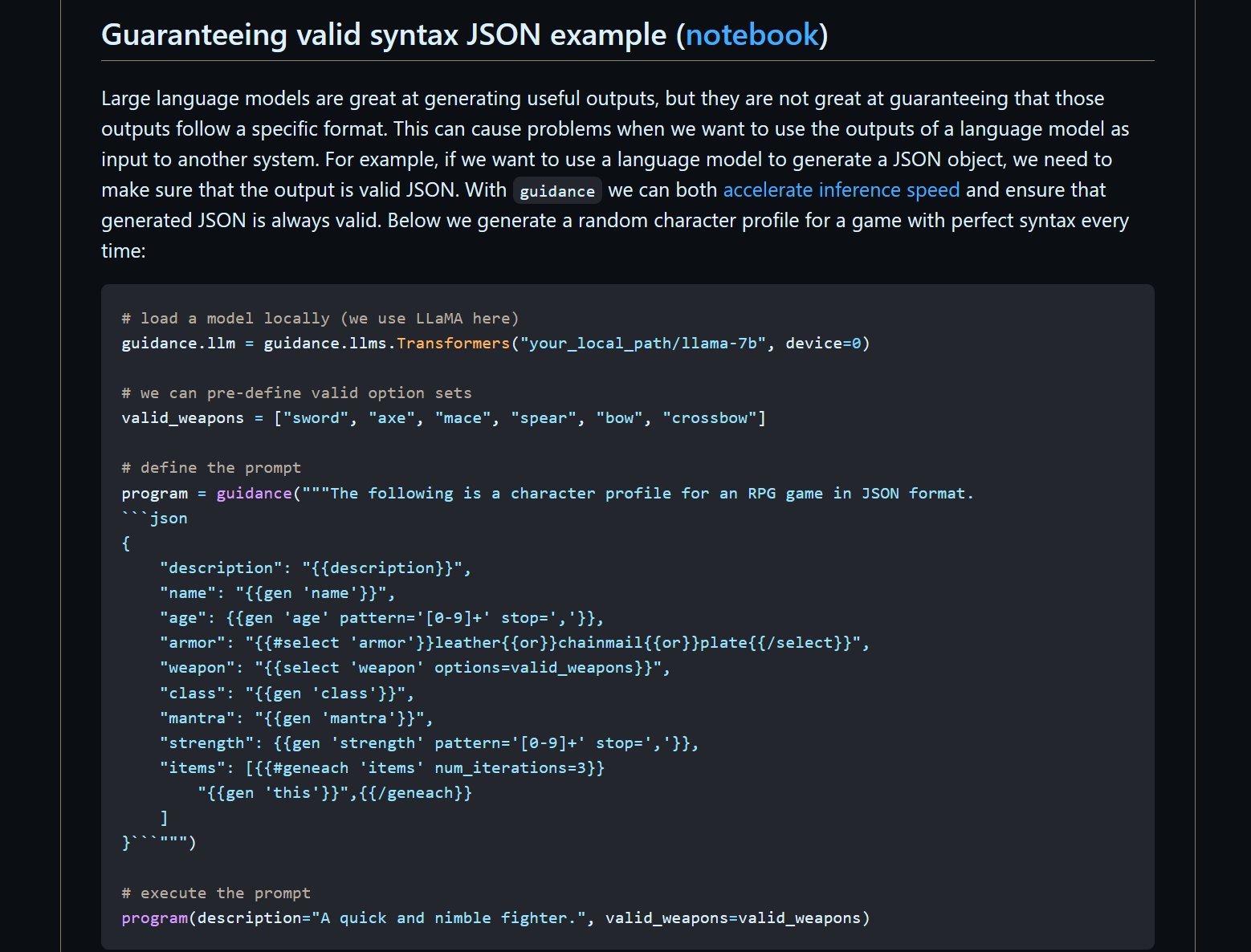

In a notable announcement, Microsoft has released Guidance (available on GitHub), a templating language for controlling large language models (LLMs). Guidance gives users finer-grained control over what a model generates, and its simple yet comprehensive syntax makes it possible to architect complex LLM workflows. According to the project, this lets users control modern language models more effectively and efficiently than traditional prompting or chaining. Let’s dive into the details and explore the features that make Guidance the Bible for language models.

Guidance’s key feature is its ability to interleave generation, prompting, and logical control in a single continuous flow. This matches how language models actually process text: left to right, one token at a time. Because guidance programs execute in the same linear order in which the model processes text, the model can be called to generate at any point during execution, giving users precisely structured output with greater accuracy and transparency. Combined with variable interpolation and logical control, this makes it possible to build dynamic, versatile workflows whose results are accurate and easy to parse.
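The interleaved, left-to-right execution described above can be illustrated with a toy interpreter. This is a hypothetical sketch, not Guidance’s implementation or API: `run_program`, the stub `fake_model`, and the placeholder syntax (`{{var}}` for interpolation, `{{gen 'name'}}` for generation, loosely modeled on Handlebars) are assumptions made for illustration. Literal text is appended in order, and every generation call sees exactly the text produced so far.

```python
# Hypothetical sketch of a guidance-style program: NOT Guidance's implementation or API.
import re
from typing import Callable

# Matches {{gen 'name'}} (generate) or {{name}} (interpolate).
PLACEHOLDER = re.compile(r"\{\{\s*(?:gen\s+'(\w+)'|(\w+))\s*\}\}")


def run_program(template: str, model: Callable[[str], str], **variables: str) -> dict[str, str]:
    """Execute a template left to right, interleaving literal text and model calls."""
    text = ""  # the text produced so far; each model call sees exactly this prefix
    pos = 0
    for match in PLACEHOLDER.finditer(template):
        text += template[pos:match.start()]  # copy literal text verbatim
        gen_name, var_name = match.groups()
        if gen_name:
            output = model(text)  # generate from the current prefix
            variables[gen_name] = output  # capture it for later interpolation
            text += output
        else:
            text += variables[var_name]  # interpolate a supplied or generated value
        pos = match.end()
    text += template[pos:]
    return {**variables, "text": text}


def fake_model(prefix: str) -> str:
    # Stand-in for an LLM: gives a canned answer when the prompt ends with "Answer:".
    return "Paris" if prefix.rstrip().endswith("Answer:") else "?"


result = run_program(
    "Q: What is the capital of {{country}}?\nAnswer: {{gen 'answer'}}\nSo the answer is {{answer}}.",
    fake_model,
    country="France",
)
print(result["answer"])  # Paris
print(result["text"])
```

Because generated values are captured by name, later parts of the same template can reuse them, which is what makes the final output easy to parse.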

One of Guidance’s main advantages is how easily it imposes output structures such as Chain of Thought (CoT) and its variants, including ART and Auto-CoT. These structures have been shown empirically to improve language model performance, and more capable LLMs like GPT-4 make even richer structures possible. Guidance makes such structures easy and cost-effective to build.

Let’s explore some of the key features of Guidance that enable greater control over LLMs:

1. Simple and Intuitive Syntax: Inspired by Handlebars templating, Guidance offers a user-friendly syntax that makes it easy to get started. Users can perform variable interpolation and exercise logical control, similar to conventional templating languages.

2. Rich Output Structures: Guidance supports rich output structures, including multiple generations, selections, conditionals, and tool use. This lets users express complex language model workflows in a single program.

3. Playground-like Streaming Experience: In Jupyter/VS Code notebooks, Guidance streams output in a playground-like view, making it easy to test and refine language model workflows interactively.

4. Smart Generation Caching: Guidance implements smart, seed-based generation caching, so repeated runs of a program can reuse earlier generations instead of calling the model again, improving efficiency.

5. Support for Role-Based Chat Models: Guidance supports role-based chat models such as ChatGPT, so programs can structure interactive conversations as turns from different roles (for example, system, user, and assistant).
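Seed-based caching (feature 4 above) can be sketched as memoizing generations on the pair (prompt, seed). The `CachedGenerator` class and `toy_sampler` function below are hypothetical stand-ins, not Guidance’s API. The underlying assumption is that a seeded sampler is deterministic, so the same prompt and seed can safely return a cached result, while a different seed still yields a fresh sample.

```python
# Hypothetical sketch of seed-based generation caching: NOT Guidance's API.
import random
from typing import Callable

Sampler = Callable[[str, random.Random], str]


class CachedGenerator:
    """Memoizes generations on (prompt, seed); a seeded sampler is deterministic."""

    def __init__(self, sample: Sampler) -> None:
        self._sample = sample
        self._cache: dict[tuple[str, int], str] = {}
        self.model_calls = 0  # how many times the underlying sampler actually ran

    def generate(self, prompt: str, seed: int = 0) -> str:
        key = (prompt, seed)
        if key not in self._cache:
            self.model_calls += 1
            # Seeding the RNG makes the sample reproducible, so caching it is safe.
            self._cache[key] = self._sample(prompt, random.Random(seed))
        return self._cache[key]


def toy_sampler(prompt: str, rng: random.Random) -> str:
    # Stand-in for sampling an LLM at nonzero temperature.
    return prompt + " " + rng.choice(["sunny", "rainy", "cloudy"])


gen = CachedGenerator(toy_sampler)
first = gen.generate("Tomorrow will be", seed=1)
again = gen.generate("Tomorrow will be", seed=1)  # cache hit: no new model call
other = gen.generate("Tomorrow will be", seed=2)  # new seed: fresh sample
print(first == again, gen.model_calls)  # True 2
```

Keying on the seed as well as the prompt is the design choice that matters: caching on the prompt alone would silently collapse distinct samples into one.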

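Guidance also integrates with Hugging Face models, which unlocks additional features: guidance acceleration, which speeds up programs compared with standard prompting; token healing, which fixes awkward token boundaries at the end of a prompt; and regex pattern guides, which force generated text to follow a given format. This makes it easy to use Guidance with models users already run.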
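Token healing addresses a tokenization artifact: if a prompt ends partway through what would normally be a single token (for example, ending with `http:` when the vocabulary also has a `://` token), the model is pushed toward unusual continuations. A minimal sketch of the idea, assuming a toy vocabulary and a greedy longest-match tokenizer rather than a real BPE tokenizer, backs up by one token and restricts the first generated token to those that start with the removed text. The names `VOCAB`, `tokenize`, and `heal_prompt` are hypothetical and not part of Guidance.

```python
# Hypothetical sketch of token healing with a toy vocabulary: NOT Guidance's implementation.
VOCAB = ["http", "https", ":", "//", "://", "www", ".", "com", "The", " ", "link", "is"]


def tokenize(text: str, vocab: list[str]) -> list[str]:
    """Greedy longest-match tokenization; unknown characters become 1-char tokens."""
    by_length = sorted(vocab, key=len, reverse=True)
    tokens, i = [], 0
    while i < len(text):
        token = next((t for t in by_length if text.startswith(t, i)), text[i])
        tokens.append(token)
        i += len(token)
    return tokens


def heal_prompt(prompt: str, vocab: list[str]) -> tuple[str, list[str]]:
    """Drop the prompt's last token; return (shortened prompt, allowed first tokens)."""
    tokens = tokenize(prompt, vocab)
    if not tokens:
        return prompt, list(vocab)  # nothing to heal
    removed = tokens[-1]
    # The first generated token must re-create the removed text, possibly extended.
    allowed = [t for t in vocab if t.startswith(removed)]
    return "".join(tokens[:-1]), allowed


shortened, allowed = heal_prompt("The link is http:", VOCAB)
print(repr(shortened))  # 'The link is http'
print(allowed)          # [':', '://']
```

A generator would then sample its first token only from `allowed`, so the model can choose `://` as it would have seen in training instead of being stuck right after `:`.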

Microsoft’s commitment to advancing language models is also evident in its recent research. Microsoft researchers unveiled automatic prompt optimization (APO), a framework for optimizing LLM prompts, as well as open-source tools and datasets for auditing AI-powered content moderation systems. These developments contribute to the ongoing effort to make language models more reliable and efficient.