OpenAI Whisper is a powerful automatic speech recognition system that can transcribe speech across dozens of languages and handle poor audio quality and technical language. Its multitasking and multilingual capabilities allow it to perform speech translation and language identification, making it a versatile tool for various applications. Whisper’s use of a transformer sequence-to-sequence model and large and diverse dataset results in improved accuracy and robustness to accents and background noise. Although it may require more technical knowledge to install and use, Whisper is an exciting advancement in speech-to-text technology.

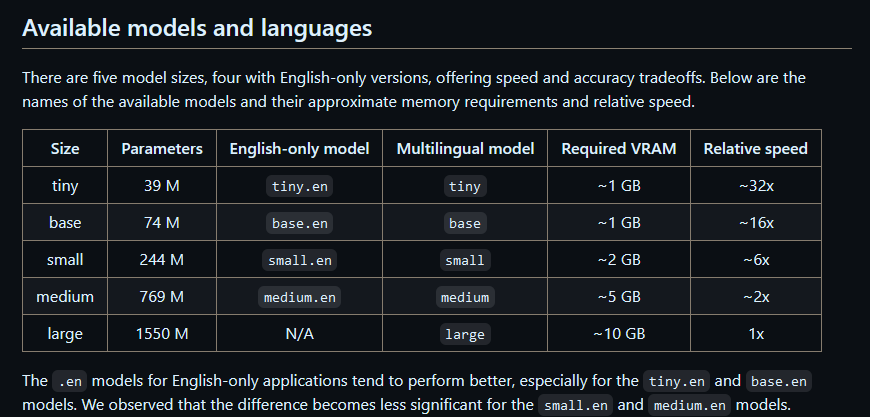

How to set up and use Whisper, a Python package for transcribing speech in audio files? To use the package, you will need Python 3.8-3.10, PyTorch, and a few Python packages, including HuggingFace Transformers and ffmpeg-python. You can download and install Whisper using the “pip install” command or by pulling the latest commit from the repository. You will also need to install ffmpeg using your system’s package manager.

Once you have set up Whisper, you can transcribe speech in audio files using the command-line interface or within Python. The command-line interface allows you to specify the model, language, and task. You can also use the detect_language() and decode() functions within Python for lower-level access to the model.